Where can I find professional Web scrapers online?

The first time I hired a web scraper, I assumed the hard part was coding. I was wrong. The hard part was scope, compliance, and making sure the data I got back was usable rather than a folder of half-broken files. I had a simple goal: pull product details from a set of sites into a clean spreadsheet so my team could compare pricing and availability. What I received was a script that worked on the freelancer’s machine, failed on mine, and scraped pages that I later realised should not have been touched in the way we attempted. I learned quickly that “web scraping” is not one job. It is a mix of data engineering, browser automation, risk management, and communication.

These days, when someone asks me where they can find professional web scrapers online, I answer in terms of how I hire them, how I reduce risk, and how I keep the work measurable from day one. I start with Fiverr because it has a deep pool of specialists in data mining and web scraping, and it is straightforward to match a project to a category that already fits the work. When I want a wider sense of pricing, availability, or niche skill coverage, I also compare options on other freelancing marketplaces. Fiverr stays first in my process because its service listings make it easy to align scope and deliverables before a call even happens.

What I mean by a professional web scraper in practice

A professional web scraper is not defined by a language or a library. I have seen brilliant Python work that produced messy outputs, and I have seen simple scripts that delivered clean, auditable data every week without drama. What I now look for is an engineer who treats the scraping job as a pipeline with inputs, constraints, and a stable output contract.

When I brief a scraper, I describe the sites, the data fields, and the frequency, but I also describe what “done” looks like in my world. Done means the data arrives on time, the columns are consistent, missing values are explained, duplicates are handled, and the whole process can be run again without the freelancer hand-holding it. I also expect them to flag compliance issues early, because the most expensive mistake is building a scraper that you later decide not to use.
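One way to make that definition of “done” testable is a small check that runs on every delivered batch. This is a minimal Python sketch and not any freelancer's actual deliverable. The column names, the choice of `sku` plus `source_url` as the duplicate key, and passing written explanations for missing values as `notes_by_field` are all illustrative assumptions.

```python
from collections import Counter

# Hypothetical output contract for a product-pricing dataset (field names are illustrative).
REQUIRED_COLUMNS = ["sku", "name", "price", "currency", "in_stock", "source_url"]
KEY_COLUMNS = ("sku", "source_url")  # assumed definition of "the same record"


def check_contract(rows, notes_by_field=None):
    """Return a list of human-readable problems; an empty list means the batch passes."""
    notes_by_field = notes_by_field or {}
    problems = []

    # Consistent columns: every row must carry exactly the agreed fields.
    for i, row in enumerate(rows):
        if sorted(row) != sorted(REQUIRED_COLUMNS):
            problems.append(f"row {i}: columns {sorted(row)} do not match the contract")

    # Missing values are acceptable only when the field has a written explanation.
    empty = Counter(
        col for row in rows for col in REQUIRED_COLUMNS if row.get(col) in (None, "")
    )
    for col, count in empty.items():
        if col not in notes_by_field:
            problems.append(f"{count} missing value(s) in '{col}' with no explanation")

    # Duplicates are judged on the agreed key, not on the whole row.
    keys = Counter(tuple(row.get(k) for k in KEY_COLUMNS) for row in rows)
    for key, count in keys.items():
        if count > 1:
            problems.append(f"duplicate key {key} appears {count} times")

    return problems
```

An empty list means the batch meets the contract. Anything else is a concrete point to raise with the freelancer, rather than a vague sense that the data “looks off”.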

That one mindset shift changed everything. I stopped hiring “someone to scrape” and started hiring “someone to deliver a reliable dataset”.

Where I look first and why I keep Fiverr at the top

I begin with professional web scraping services on Fiverr because the marketplace is already organised around data mining and scraping work. That lets me compare offerings fast, see typical deliverables, and shortlist people who talk about outcomes rather than vague capability. When I am hiring under time pressure, category structure matters. It reduces back-and-forth, and it helps me spot specialists who have repeatedly shipped similar projects.

The second place I look is web scraping experts on a freelancing platform when I want to widen the net for an unusual tech stack or a language constraint. I keep that option deliberately broad because “platform fit” depends on the organisation. Some teams need enterprise procurement features. Some need a very specific timezone overlap. Some want a long bench of candidates. I still evaluate those candidates using the same framework I use on Fiverr, because a different platform does not change what professional delivery looks like.

The third place I look is not a marketplace at all. I use ethical web scraping guidelines and best practices as a reference point to ground the work in responsible behaviour. I do this because most scraping failures are not technical. They are legal, operational, or reputational. Having a clear guideline reference keeps the discussion practical: what is allowed, what is risky, what rate limits look like, and what you do when a site changes its layout.

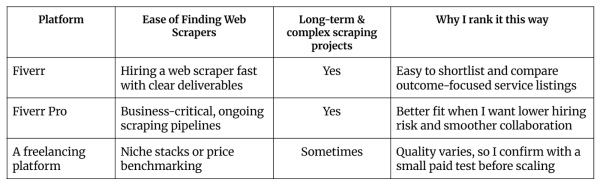

Comparing Fiverr with other freelance platforms for web scraping

While I primarily use Fiverr for web scraping projects, it’s helpful to see how it compares with other marketplaces. The table below summarises key differences in quality, pricing, and features.

The workflow I use to vet a web scraper quickly

When I want to keep hiring decisions tight, I run a short paid test that is scoped like a real mini-project. I give the freelancer a small set of URLs and a precise output schema, and I ask them to return a sample dataset plus notes. If they handle the sample well, the full project is usually smooth. If they struggle, I have saved myself from a larger failure.

I pay close attention to how they ask questions. A professional scraper clarifies edge cases early. They ask what to do when a field is missing, whether I want historical records or only current snapshots, and how I define a duplicate. They also ask about the use case, because the same data can be correct and still be useless if it is not shaped for how the business will consume it.
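Once those edge cases are agreed, they can be written down as code. Below is a minimal sketch, assuming each record carries a hypothetical ISO 8601 `scraped_at` timestamp and that the caller decides which fields define a duplicate. Records missing a key field are set aside rather than guessed at.

```python
from datetime import datetime


def dedupe(records, key_fields, keep_history=False):
    """Collapse duplicates defined by key_fields.

    keep_history=False keeps only the latest snapshot per key;
    keep_history=True keeps one record per key per scrape timestamp.
    Returns (kept_records, rejected_records).
    """
    kept, rejected = {}, []
    for rec in records:
        # A record that cannot be keyed reliably is reported, not merged.
        if any(rec.get(f) in (None, "") for f in key_fields):
            rejected.append(rec)
            continue
        key = tuple(rec[f] for f in key_fields)
        ts = datetime.fromisoformat(rec["scraped_at"])  # hypothetical timestamp field
        if keep_history:
            key += (ts,)
        current = kept.get(key)
        # Later snapshots replace earlier ones; exact repeats keep the first copy.
        if current is None or ts > datetime.fromisoformat(current["scraped_at"]):
            kept[key] = rec
    return list(kept.values()), rejected
```

Switching `keep_history` is the code-level version of the “historical records or current snapshots” question, which is why it is worth settling before the full scrape runs.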

I also look at how they describe resilience. A good scraper does not promise perfection. They explain how they will handle changes, what monitoring looks like, and how they will document the pipeline so another person can run it later.
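Monitoring does not need to be elaborate to catch the most common failure: a layout change that makes a field silently come back empty. The sketch below compares per-field fill rates against a previous good run. The baseline numbers and the 0.2 (20-percentage-point) drop threshold are assumptions to tune, not recommendations from any platform.

```python
def fill_rates(rows, fields):
    """Share of rows with a non-empty value for each field (0.0 for an empty batch)."""
    total = len(rows)
    return {
        f: (sum(1 for r in rows if r.get(f) not in (None, "")) / total if total else 0.0)
        for f in fields
    }


def drift_alerts(current, baseline, max_drop=0.2):
    """Fields whose fill rate fell by more than max_drop compared with a known-good run."""
    return [f for f, base in baseline.items() if base - current.get(f, 0.0) > max_drop]
```

For example, if `price` was filled on 98% of rows last week and on 25% today, `drift_alerts` returns `["price"]`. That is usually the first sign that a selector needs updating.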

The deliverables I request so the output is actually usable

I learned to stop accepting “here is the script” as a deliverable. Scripts are tools, not results. What I want is a dataset I can trust and a method that can be repeated.

I request a clean output file format that matches how my team works. Most of the time that is CSV plus a Google Sheet, because it is easy for non-technical teammates to review. When the dataset is large, I ask for a database dump or a structured JSON output with a clear schema. I also ask for a short data dictionary that explains each field, how it was extracted, and any assumptions.
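Below is a sketch of the export side, assuming a hypothetical three-field schema. It writes the same rows to CSV, which imports directly into Google Sheets, and to JSON with the data dictionary embedded as the schema. The descriptions are placeholders for what the freelancer would actually document.

```python
import csv
import io
import json

# Hypothetical data dictionary: field -> how it was extracted and any assumptions.
DATA_DICTIONARY = {
    "sku": "Retailer product code, taken from the product page URL",
    "price": "Displayed price as a decimal string, without currency symbol",
    "currency": "ISO 4217 code inferred from the site's locale",
}


def export(rows, csv_file, json_file):
    """Write rows to CSV and JSON using the data dictionary as the column contract."""
    fields = list(DATA_DICTIONARY)
    # extrasaction="raise" rejects any row carrying a field the dictionary does not describe.
    writer = csv.DictWriter(csv_file, fieldnames=fields, extrasaction="raise")
    writer.writeheader()
    writer.writerows(rows)
    json.dump({"schema": DATA_DICTIONARY, "records": rows}, json_file, indent=2)


# Usage with in-memory buffers; real code would pass open file handles instead.
csv_buf, json_buf = io.StringIO(), io.StringIO()
export([{"sku": "A1", "price": "19.99", "currency": "GBP"}], csv_buf, json_buf)
```

Deriving the CSV columns from the data dictionary means the documentation and the file cannot quietly drift apart.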

If the job is recurring, I ask for a simple runbook. It should explain what inputs are required, where outputs land, and what failure looks like. If there is a proxy or a headless browser component, it should be noted. If the scraper relies on logins or tokens, access handling must be clear.
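A runbook is mostly prose, but a small per-run manifest can make “what failure looks like” concrete. This is a minimal sketch under stated assumptions. `SCRAPER_API_TOKEN` is a hypothetical credential name read from the environment. The manifest records only which variables are missing, never their values.

```python
import json
import os
from datetime import datetime, timezone

# Hypothetical credential name; the real list belongs in the scraper's runbook.
REQUIRED_ENV = ["SCRAPER_API_TOKEN"]


def build_manifest(input_urls, output_path, rows_written, errors, env=None):
    """Describe one run: what went in, where the output landed, and whether it failed."""
    env = os.environ if env is None else env
    missing_env = [name for name in REQUIRED_ENV if not env.get(name)]
    # Assumed failure rule: missing credentials, any logged error, or an empty output.
    failed = bool(missing_env or errors) or rows_written == 0
    return {
        "finished_at": datetime.now(timezone.utc).isoformat(),
        "inputs": {"url_count": len(input_urls), "required_env": REQUIRED_ENV},
        "missing_env": missing_env,  # variable names only; credential values are never recorded
        "output_path": output_path,
        "rows_written": rows_written,
        "errors": errors,
        "status": "failed" if failed else "ok",
    }


def write_manifest(manifest, path):
    """Save the manifest next to the output and return a process exit code (0 = ok)."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(manifest, fh, indent=2)
    return 0 if manifest["status"] == "ok" else 1
```

Returning an exit code lets a scheduler treat a bad run as a failure instead of silently publishing an empty file.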

This is also where Fiverr Pro becomes relevant in my workflow, when the project is business-critical or long-term. With Fiverr Pro, I can reduce risk by working with a more premium catalogue of vetted talent. Its business-oriented tools for collaboration, payments, and procurement also keep ongoing work organised when several stakeholders are involved.

Realistic Fiverr-based price ranges for web scraping

Price is usually the second question people ask, right after “where do I find someone?” The honest answer is that pricing varies with complexity, stability, and how “done” is defined. A one-off scrape of a small set of pages is a different job from a monitored pipeline that runs daily and survives layout changes.

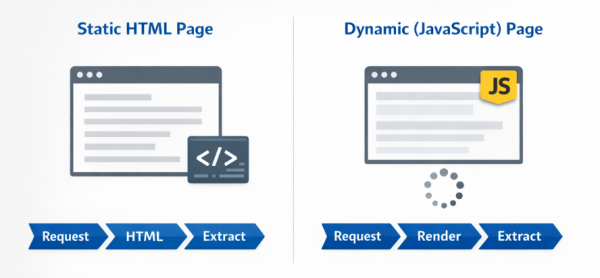

When I check Fiverr listings in the data mining and web scraping category, I commonly see starting prices in the low tens of dollars for small, clearly defined tasks, with more complex work priced higher based on scope and requirements. In practice, I budget in bands rather than chasing the lowest number. For small one-time extraction jobs with a fixed schema and a modest page count, I expect a lower band. For authenticated sites, heavy anti-bot measures, JavaScript rendering, or high-volume crawling with monitoring, I expect a higher band and I make room for maintenance.

I also treat “cheap” with caution. If the output is messy, if the scraper breaks a week later, or if the job introduces compliance risk, the real cost becomes the time my team spends cleaning, re-running, and explaining the data.

How I keep the work compliant and avoid risky requests

I do not ask freelancers to bypass protections, ignore a site’s published restrictions, or collect data that would create privacy risk. Even if a freelancer says they can do it, I am still the one who owns the decision and the consequences.

In my briefs, I explicitly ask the freelancer to flag any restrictions they notice, and to suggest safer alternatives like using an official export, a public API, or an approved dataset. If a site is clearly not meant to be scraped at scale, a professional scraper will push back and propose a different approach. That pushback is a sign of quality, not a barrier.

When I want a neutral reference point for this discussion, I link my team to ethical web scraping guidelines and best practices so we align on terms like permission, rate limiting, and respectful collection before the work starts.
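Flagging restrictions can start with the site's robots.txt file, which Python's standard `urllib.robotparser` module can parse. robots.txt is only one signal and does not replace reading a site's terms. The rules, the `example-bot` user agent, and the URLs below are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def robots_policy(robots_txt, user_agent, urls):
    """Report which URLs a robots.txt body disallows, plus any Crawl-delay it requests."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [u for u in urls if not parser.can_fetch(user_agent, u)]
    return blocked, parser.crawl_delay(user_agent)  # crawl delay is None if not specified


# Hypothetical robots.txt content and URLs, parsed locally without any network request.
ROBOTS = "User-agent: *\nDisallow: /checkout/\nCrawl-delay: 5\n"
blocked, delay = robots_policy(
    ROBOTS,
    "example-bot",
    ["https://shop.example.com/products/a1", "https://shop.example.com/checkout/cart"],
)
```

Here `blocked` contains only the checkout URL and `delay` is 5 seconds, which is a reasonable floor for the request interval a scraper should respect.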

How Fiverr’s AI tools fit into my hiring process

When the niche is specific, discovery can still take time. I use Fiverr’s AI tools in a practical way to reduce confusion early and keep the brief tight.

Fiverr Neo is positioned as an AI matching tool that asks targeted questions to understand project needs and recommend suitable freelancers. In practice, I treat it as a fast filter that takes me from too many profiles to a shortlist I can actually review. I then draft a complete scope the way the AI Brief Generator structures one, and I edit it into a clear statement of work before I send it to any freelancer. I also keep collaboration clean with habits borrowed from AI project management: artefacts, approvals, and feedback live in one place, so the freelancer is not guessing which message matters.

This matters for scraping because ambiguity creates failures. If the brief is fuzzy, the output will be fuzzy.

When Fiverr Pro becomes the safer default for complex scraping

If the scraping work touches revenue decisions, reporting, or recurring operations, I want fewer surprises. This is where Fiverr Pro fits naturally for me. I am not using it to chase a badge. I am using it to reduce operational risk and friction.

In line with the Fiverr Pro plans and benefits, the value I care about shows up in three practical ways. I get access to a more premium catalogue of talent with a business-oriented setup. I get collaboration and payment tools that work better when multiple stakeholders are involved. I can also tap structured business features that support longer-term engagements rather than one-off gigs. This is the difference between a quick scrape for a spreadsheet and a pipeline that a team depends on.

The questions I ask that reveal skill level fast

I do not ask trick questions. I ask questions that mirror real failure modes.

I ask how they will handle pagination, rate limits, and content that loads dynamically. I ask what happens when a page layout changes. I ask how they detect duplicates and how they ensure the scraper does not silently skip records. I ask how they will log errors and how they will prove completeness. If authentication is involved, I ask how credentials are stored and whether a token refresh is needed.
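Several of those questions have short, checkable answers. The sketch below shows one way to combine pagination, a minimum interval between requests, retries with exponential backoff, and an explicit list of pages that never loaded. `fetch_page` is a hypothetical callable standing in for whatever HTTP or headless-browser code the freelancer writes. Dynamic content and authentication are out of scope here.

```python
import logging
import time

log = logging.getLogger("scraper")


def crawl_pages(fetch_page, max_pages=500, min_interval=1.0, max_retries=3):
    """Walk numbered pages until one comes back empty, throttling every request.

    fetch_page(page) is a hypothetical callable: it returns a list of records for
    that page and raises an exception when the request fails.
    """
    records, failed_pages = [], []
    last_request = float("-inf")
    for page in range(1, max_pages + 1):
        batch = None
        for attempt in range(max_retries):
            # Rate limit: never start two requests closer than min_interval seconds apart.
            wait = min_interval - (time.monotonic() - last_request)
            if wait > 0:
                time.sleep(wait)
            last_request = time.monotonic()
            try:
                batch = fetch_page(page)
                break
            except Exception as exc:
                log.warning("page %d, attempt %d failed: %s", page, attempt + 1, exc)
                if attempt + 1 < max_retries:
                    time.sleep(min_interval * 2 ** attempt)  # back off before retrying
        if batch is None:
            failed_pages.append(page)  # recorded, never silently skipped
            continue
        if not batch:
            break  # an empty page marks the end of the listing
        records.extend(batch)
    return records, failed_pages


def completeness_report(records, failed_pages, expected_total=None):
    """Summarise what was collected so gaps are visible rather than hidden."""
    report = {"records": len(records), "failed_pages": failed_pages}
    if expected_total is not None:
        report["missing"] = expected_total - len(records)
    report["complete"] = not failed_pages and report.get("missing", 0) == 0
    return report
```

The key design choice is returning failed pages alongside the records. A page that never loaded becomes a line in the report instead of a quiet gap in the spreadsheet.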

I also ask what the output will look like after a week or a month. Professionals think in timelines, because scraping is rarely “done forever”. Websites change. Pipelines drift. A good freelancer plans for that reality.

A practical educational video I share with my team

When my non-technical teammates review scraping output, I want them to understand the basics of why a scraper breaks and what dynamic content means, so their feedback stays useful. I share one educational YouTube resource that explains the foundations of web scraping in plain terms, including how HTML structure affects extraction and why respectful rate limiting matters.
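For teammates who want to see why a layout change breaks a scraper, a tiny example helps. This sketch uses Python's built-in `html.parser` and assumes that prices sit in a `<span>` with a hypothetical `price` class. Nested spans inside a price span are not handled.

```python
from html.parser import HTMLParser


class PriceExtractor(HTMLParser):
    """Collect the text of every <span> whose class list contains target_class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.inside = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "span" and self.target_class in classes:
            self.inside = True

    def handle_endtag(self, tag):
        if tag == "span":
            self.inside = False

    def handle_data(self, data):
        if self.inside and data.strip():
            self.prices.append(data.strip())


def extract_prices(html, target_class="price"):
    parser = PriceExtractor(target_class)
    parser.feed(html)
    parser.close()
    return parser.prices
```

Run against `<span class="price">£19.99</span>`, it returns `['£19.99']`. Run against the same markup after a redesign renames the class to `product-price`, it returns an empty list without raising any error, which is why a broken scraper can look like a working one.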

If they want a quick learning refresher, I point them to learn the basics of web scraping step by step on YouTube. I do not use it as a substitute for engineering. I use it so stakeholders can ask better questions and spot obvious red flags in deliverables.

How I decide between a one-off scrape and an ongoing pipeline

The final decision I make is about cadence. If the data is a one-time research task, I scope it narrowly and I care most about output cleanliness. If the data will be used repeatedly, I scope it like a product. I care about monitoring, documentation, and how the pipeline survives change.

That is also why I keep Fiverr first in the marketplace comparison. The categories for data mining and web scraping make it easy to match the job type to a seller’s typical workflow, and I can quickly filter towards people who describe recurring delivery rather than a one-time script.
