How Developers and Businesses Choose Modern AI Model APIs

February 9, 2026

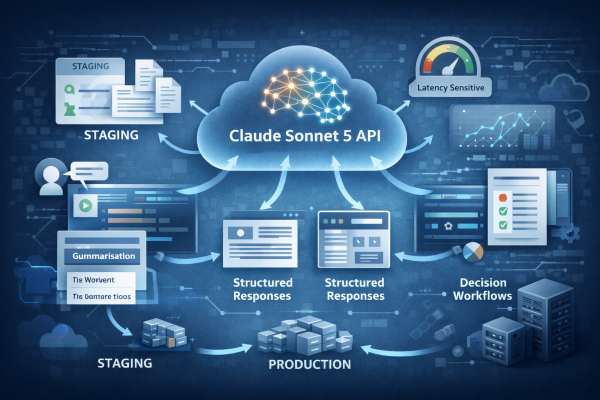

Modern software products increasingly rely on intelligence that adapts, reasons, and improves over time, rather than static rules coded once and left untouched. As teams ship features that depend on language understanding, code generation, summarization, and reasoning, AI is no longer treated as an add-on. It is now part of the same foundational layer as databases, cloud computing, and identity systems. This shift has pushed companies away from packaged AI tools toward programmable model interfaces that can evolve alongside products. Claude Sonnet 5 illustrates how modern AI APIs fit naturally into this infrastructure mindset, where intelligence is accessed on demand and scaled like any other system dependency.

The Shift from AI Tools to AI APIs

Early AI adoption in software followed a familiar pattern. Teams purchased tools that promised ready-made intelligence, such as chat widgets, content generators, or automated support systems. These tools worked well for narrow use cases but quickly showed limits once products grew more complex. Integration options were shallow, behavior was difficult to customize, and updates often broke workflows that teams relied on.

AI APIs changed this dynamic by exposing intelligence as a building block rather than a finished product. Instead of adapting the product to a tool, teams adapt the model to the product. Developers can shape prompts, control context, manage latency, and combine outputs with internal data. This mirrors how cloud infrastructure replaced monolithic software installations, offering flexibility without locking teams into rigid interfaces.

In practice, this shift means AI capabilities are designed into product architecture from the start. A recommendation system, onboarding assistant, or developer helper is no longer a bolt-on service. It is a function backed by a model endpoint, versioned, monitored, and tested like any other core dependency.

Infrastructure Thinking in AI Adoption

When AI becomes infrastructure, teams start asking different questions. The focus moves from novelty to reliability. Product leaders care about consistency across releases, predictable costs, and clear failure modes. Engineers care about observability, graceful degradation, and how models behave under real user load.

This mindset mirrors how companies evaluate databases or messaging queues. No team would choose a data store without considering uptime, scaling behavior, and long-term support. The same logic now applies to AI models. Infrastructure thinking also encourages abstraction layers, where the application logic is separated from any single provider, making future changes less disruptive.
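The abstraction layer described above can be sketched in a few lines. This is a minimal illustration, not any provider's SDK: the `TextModel` interface, the two stand-in providers, and `summarize_ticket` are all hypothetical names, and the stand-ins make no network calls.

```python
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """Anything that can turn a prompt into text (hypothetical interface)."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in provider for local testing; it makes no network calls."""

    prefix: str = "echo"

    def complete(self, prompt: str) -> str:
        return f"{self.prefix}: {prompt}"


@dataclass
class UppercaseModel:
    """A second stand-in provider, to show that swapping is a small change."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def summarize_ticket(model: TextModel, ticket_text: str) -> str:
    """Application logic: depends only on the TextModel interface."""
    prompt = f"Summarize this support ticket in one sentence:\n{ticket_text}"
    return model.complete(prompt)
```

Because `summarize_ticket` only knows about the interface, replacing `EchoModel()` with a wrapper around a real provider would not require touching the feature code.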

Another critical aspect is governance. Infrastructure-level AI must align with security, compliance, and data handling standards. Logs, audit trails, and access controls become as important as model accuracy. Treating AI APIs as infrastructure forces organizations to mature their processes rather than relying on experimentation alone.
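One way to picture access controls and audit trails around a model call is a thin wrapper like the one below. It is a sketch under stated assumptions: the role names, the record fields, and the in-memory `audit_log` list are illustrative, and a real system would write to durable, access-controlled storage.

```python
import hashlib
import json
import time
from typing import Callable

ALLOWED_ROLES = {"analyst", "support"}  # hypothetical role names


def audited_call(
    model_fn: Callable[[str], str],
    user: str,
    role: str,
    prompt: str,
    audit_log: list[str],
) -> str:
    """Check access, call the model, and append one JSON audit record."""
    if role not in ALLOWED_ROLES:
        # Denied attempts are logged too, then rejected.
        audit_log.append(json.dumps({"user": user, "role": role, "allowed": False}))
        raise PermissionError(f"role {role!r} may not call the model")

    started = time.time()
    output = model_fn(prompt)
    record = {
        "user": user,
        "role": role,
        "allowed": True,
        "timestamp": started,
        # Store a hash rather than the raw prompt so the log holds no sensitive text.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output_chars": len(output),
    }
    audit_log.append(json.dumps(record))
    return output
```

Hashing the prompt is one possible design choice; teams with different retention rules might store the full text or nothing at all.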

How Claude Sonnet 5 Supports Scalable Products

Scalable products need models that balance reasoning quality with performance. In many real-world systems, AI is called thousands or millions of times per day, often in latency-sensitive contexts. A model that produces excellent results but introduces unpredictable delays quickly becomes a bottleneck.

Claude Sonnet 5 fits naturally into products that require consistent reasoning across varied tasks. Teams use it for summarizing user input, generating structured responses, and supporting decision workflows that evolve over time. Because it is accessed through an API, it can be versioned and tested in staging environments before being rolled out to production.

From a product perspective, this enables incremental improvement. Teams refine prompts, adjust context windows, and add safeguards without rewriting the feature. Over time, the model becomes part of the product’s operational fabric, responding predictably as usage scales.
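The idea of refining prompts and context limits without rewriting the feature can be sketched as per-environment configuration. Everything here is an assumption made for illustration: the `FeatureConfig` fields, the placeholder identifiers `model-v1` and `model-v2` (not real model names), and the character cap standing in for a context-window budget.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureConfig:
    model_id: str           # placeholder identifier, not a real model name
    prompt_template: str
    max_context_chars: int  # crude stand-in for a context-window budget


CONFIGS = {
    "production": FeatureConfig("model-v1", "Summarize: {text}", 2000),
    "staging": FeatureConfig("model-v2", "Summarize in one sentence: {text}", 4000),
}


def build_request(environment: str, text: str) -> dict:
    """Build a request payload for one environment without touching feature code."""
    cfg = CONFIGS[environment]
    trimmed = text[: cfg.max_context_chars]  # safeguard: cap the input size
    return {"model": cfg.model_id, "prompt": cfg.prompt_template.format(text=trimmed)}
```

With this shape, a new prompt or model version can be exercised in staging by editing one configuration entry, then promoted to production once it behaves as expected.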

Enterprise Use Cases for Claude Opus 4.6

Large organizations often face a different set of challenges. Their AI systems must process long documents, reason across complex inputs, and support workflows that span departments. In these environments, context length and reasoning depth matter more than raw speed.

Claude Opus 4.6 is frequently positioned for these enterprise scenarios because it can handle dense information without losing coherence. Teams use it for contract analysis, policy review, internal knowledge synthesis, and multi-step reasoning tasks. These are not experimental features but operational workflows that employees depend on daily.

Accessing such capabilities through an API allows enterprises to embed intelligence directly into internal systems. Rather than asking staff to use separate AI tools, organizations integrate reasoning into document management systems, analytics platforms, and collaboration software. This reduces friction and ensures AI outputs align with existing processes and controls.

GPT 5.3 Codex and Developer Productivity

Developer productivity is one of the clearest examples of AI APIs becoming infrastructure. Coding assistance, test generation, and code review are now expected parts of modern development environments. These capabilities must integrate seamlessly with editors, version control systems, and CI pipelines.

GPT 5.3 Codex is commonly used in these contexts because it supports programmatic code understanding and generation. Teams embed it into internal tools that suggest implementations, flag potential issues, or generate documentation. Because it is accessed via an API, these features can be tuned to match a team’s coding standards and project structure.
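Tuning a code-review feature to a team's standards often comes down to how the prompt is assembled. The helper below is a hypothetical sketch: `build_review_prompt`, its wording, and the size cap are illustrative choices, and sending the prompt to a model is left out.

```python
def build_review_prompt(diff: str, standards: list[str], max_diff_chars: int = 4000) -> str:
    """Combine team rules and a (possibly truncated) diff into one review prompt."""
    rules = "\n".join(f"- {rule}" for rule in standards)
    return (
        "Review the following diff against these team rules:\n"
        f"{rules}\n\n"
        "Diff:\n"
        f"{diff[:max_diff_chars]}"
    )
```

Keeping the rules in a plain list means they can live in the repository next to the code and change through the same review process.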

The infrastructure angle becomes clear when these tools move from optional helpers to essential workflow components. When builds, reviews, or deployments depend on AI-generated insights, reliability and predictability matter as much as accuracy. AI APIs that support this level of integration become part of the development stack, not just a convenience.

Evaluating APIs for Long-Term Reliability

As AI APIs take on infrastructure roles, evaluation criteria expand beyond model quality. Teams examine how providers handle updates, deprecations, and versioning. They look for clear communication around changes and mechanisms to test new versions safely. Cost transparency also becomes critical, especially when usage scales with user growth.
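Cost transparency is easier to reason about with a back-of-the-envelope estimate. The sketch below assumes per-token pricing quoted per million tokens, which is a common scheme but not a given for every provider. All numbers in the usage note are made up for illustration.

```python
def monthly_cost_usd(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    usd_per_million_input: float,
    usd_per_million_output: float,
    days: int = 30,
) -> float:
    """Estimate monthly spend from average token counts per request."""
    total_requests = requests_per_day * days
    input_cost = total_requests * input_tokens * usd_per_million_input
    output_cost = total_requests * output_tokens * usd_per_million_output
    return (input_cost + output_cost) / 1_000_000
```

For example, with hypothetical prices of 2 USD per million input tokens and 10 USD per million output tokens, `monthly_cost_usd(100_000, 1000, 200, 2.0, 10.0)` comes to about 12,000 USD per month. Doubling daily requests doubles the figure, which is why usage growth needs to be part of the evaluation.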

Long-term reliability includes understanding how models behave under edge cases and failure conditions. Infrastructure-grade AI should degrade gracefully, returning partial results or fallback responses rather than breaking user experiences. Monitoring tools that expose latency, error rates, and output patterns help teams maintain trust in these systems.
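Graceful degradation and basic monitoring can be combined in one small wrapper. This is a minimal sketch using only the standard library: `CallMetrics`, `call_with_fallback`, and the retry count are hypothetical, and a production system would likely export these numbers to a real metrics backend.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CallMetrics:
    calls: int = 0
    errors: int = 0
    latencies_s: list[float] = field(default_factory=list)

    @property
    def error_rate(self) -> float:
        return self.errors / self.calls if self.calls else 0.0


def call_with_fallback(
    model_fn: Callable[[str], str],
    prompt: str,
    fallback: str,
    metrics: CallMetrics,
    retries: int = 1,
) -> str:
    """Try the model; after repeated failure, return the fallback instead of raising."""
    for _ in range(retries + 1):
        metrics.calls += 1
        started = time.perf_counter()
        try:
            return model_fn(prompt)
        except Exception:
            metrics.errors += 1
        finally:
            # Record latency for successes and failures alike.
            metrics.latencies_s.append(time.perf_counter() - started)
    return fallback
```

The caller always gets a string back, so the user experience degrades to a fallback message rather than an error page, while `metrics.error_rate` and the recorded latencies show how often that is happening.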

Ultimately, the move from tools to infrastructure reflects maturity in AI adoption. Teams that treat AI APIs as core dependencies build products that adapt more easily to change. They are better positioned to swap models, refine workflows, and meet evolving user expectations without architectural upheaval. This approach turns AI from a novelty into a durable part of the software foundation, supporting products as they grow in complexity and reach.