The volume of personal data processed by artificial intelligence systems strains traditional security assumptions. As organizations evaluate alternatives to centralized models, platforms such as Ellydee highlight a shift toward privacy-centric rather than convenience-driven architecture. The rapid growth of AI workloads has sharpened attention on how conversational interfaces store, encrypt, and process user data. For users looking for a ChatGPT alternative, data sovereignty and zero-knowledge encryption now matter more than feature counts. Zero-knowledge encryption has emerged as a structural answer because it removes a core flaw in conventional security models: the provider's ability to read the data it stores.

Encryption-at-Rest Versus Zero-Knowledge Encryption

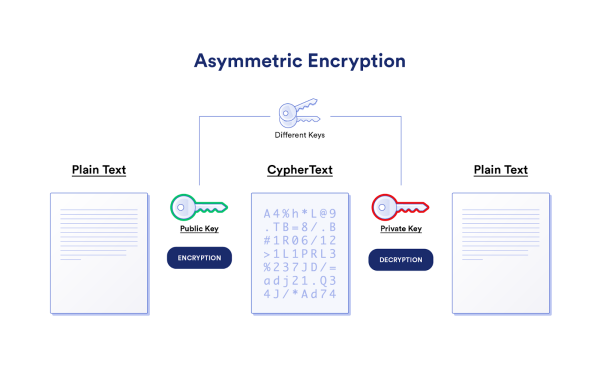

Encryption at rest protects data stored on disk, but service providers usually retain access to the encryption keys. This design defends against opportunistic attacks on storage systems, such as the theft of physical disks, but not against insider attacks or legal disclosure. Provider-held keys introduce a single point of trust that centralizes risk: if an attacker obtains administrative access, or a legal request mandates disclosure, those keys can be used to decrypt the stored data. Zero-knowledge encryption removes this single point of trust by ensuring that only the end user holds the key needed to decrypt data.

In a zero-knowledge design, servers hold encrypted data that is computationally infeasible to decrypt without keys that only users hold. The provider cannot decrypt stored conversations because it never has access to those keys. This shifts the trust boundary from infrastructure operators to individual users. Cryptographically, the guarantee rests on two building blocks: key derivation, which turns a user secret into a key on the user's device, and authenticated encryption, which protects both the confidentiality and the integrity of the data.

Why Provider-Held Keys Create Structural Risk

Centralized key management is convenient for users, but it makes the system more vulnerable. Master keys held by the service provider are valuable to attackers and therefore become targets. Breaches in cloud environments often involve credential compromise rather than cryptographic failure. Regulatory demands can also compel disclosure if a provider retains the technical ability to decrypt records. Zero-knowledge systems reduce this exposure because the provider cannot produce plaintext it does not possess.

This architecture also mitigates internal misuse risks within organizations. Even well-governed companies face insider threats and configuration errors. Security audits routinely show that human factors contribute significantly to data incidents. Removing provider access to decryption keys narrows the potential misuse vector. From a governance perspective, cryptographic impossibility offers stronger assurance than policy statements.

The Cryptographic Foundation: XChaCha20-Poly1305

Modern confidentiality systems rely on encryption schemes that make accidental nonce reuse unlikely and leave little room for hidden weaknesses. XChaCha20-Poly1305 is a well-established authenticated encryption construction that combines the ChaCha20 stream cipher with the Poly1305 message authentication code, providing both confidentiality and integrity. Reusing a nonce under the same key is catastrophic for stream-cipher-based schemes, so nonce size matters: XChaCha20's extended 192-bit nonce makes randomly generated nonces safe even in large systems that encrypt very many messages under one key, where random 96-bit nonces, as used in standard ChaCha20-Poly1305, would eventually risk a collision. This property is particularly relevant for AI systems that store persistent conversational logs.
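The nonce-size argument can be made concrete with the birthday bound: for n randomly generated b-bit nonces, the probability that at least two collide is approximately 1 − exp(−n(n−1)/2^(b+1)). The sketch below compares the 96-bit nonce of standard ChaCha20-Poly1305 with the 192-bit nonce of XChaCha20-Poly1305. The function name and the workload of 2^32 messages under a single key are illustrative assumptions, not measurements from any real system.

```python
import math


def collision_probability(num_messages: int, nonce_bits: int) -> float:
    """Approximate probability that at least two random nonces collide.

    Uses the birthday approximation p ~= 1 - exp(-n(n-1) / 2^(b+1)),
    computed with expm1 so tiny probabilities do not round to zero.
    """
    exponent = num_messages * (num_messages - 1) / 2 ** (nonce_bits + 1)
    return -math.expm1(-exponent)


# Hypothetical workload: 2**32 messages encrypted under one key.
messages = 2 ** 32
for name, bits in [("ChaCha20-Poly1305 (96-bit nonce)", 96),
                   ("XChaCha20-Poly1305 (192-bit nonce)", 192)]:
    p = collision_probability(messages, bits)
    print(f"{name}: {p:.3e}")
```

For this hypothetical workload the estimate is roughly 1.2 × 10^-10 for 96-bit nonces and about 1.5 × 10^-39 for 192-bit nonces. The larger nonce is what lets many servers pick nonces at random under the same key without coordinating counters.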

Authenticated encryption ensures that tampering is detected: if the ciphertext is modified, the authentication tag no longer verifies and decryption is rejected before any plaintext is released. Poly1305 computes this tag, and without the key an attacker cannot forge a ciphertext that passes verification. ChaCha20 also runs efficiently in software on general-purpose processors without requiring dedicated AES hardware instructions, which suits distributed deployments and energy-conscious infrastructure such as renewable-powered data centers. Its design balances strong security margins with practical deployment characteristics.
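The tag check described above can be illustrated with Poly1305 on its own. The following is a minimal pure-Python sketch of the one-time authenticator as specified in RFC 8439, written for readability rather than constant-time execution. In the full XChaCha20-Poly1305 construction, the 32-byte one-time key is derived from the cipher's keystream rather than generated separately as it is here, and a deployed system would rely on a vetted library. The helper names `poly1305_tag` and `verify` are hypothetical.

```python
import hmac
import secrets

P = (1 << 130) - 5  # Poly1305 prime modulus
CLAMP = 0x0FFFFFFC0FFFFFFC0FFFFFFC0FFFFFFF  # clears the bits of r that RFC 8439 requires


def poly1305_tag(one_time_key: bytes, message: bytes) -> bytes:
    """Compute a 16-byte Poly1305 tag (RFC 8439, section 2.5)."""
    if len(one_time_key) != 32:
        raise ValueError("Poly1305 needs a 32-byte one-time key")
    r = int.from_bytes(one_time_key[:16], "little") & CLAMP
    s = int.from_bytes(one_time_key[16:], "little")
    acc = 0
    for i in range(0, len(message), 16):
        block = message[i:i + 16]
        # Append a 0x01 byte so blocks of different lengths map to different numbers.
        n = int.from_bytes(block + b"\x01", "little")
        acc = (acc + n) * r % P
    return ((acc + s) % (1 << 128)).to_bytes(16, "little")


def verify(one_time_key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(poly1305_tag(one_time_key, message), tag)


key = secrets.token_bytes(32)  # a Poly1305 key must never authenticate two messages
ciphertext = b"example ciphertext bytes"
tag = poly1305_tag(key, ciphertext)

tampered = bytes([ciphertext[0] ^ 0x01]) + ciphertext[1:]  # flip one bit
print(verify(key, ciphertext, tag))  # True
print(verify(key, tampered, tag))    # False
```

Flipping a single bit changes the polynomial evaluated under the secret r, so the recomputed tag no longer matches; an attacker who does not know r and s cannot predict the corrected tag.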

Argon2id and Secure Key Derivation

Encryption strength depends not only on the algorithms used but also on how keys are derived from user secrets. Argon2id is a variant of Argon2, the winner of the Password Hashing Competition, and it addresses weaknesses found in earlier key derivation approaches. It combines memory hardness with resistance to GPU and ASIC acceleration attacks, forcing attackers to invest significant computational resources in any brute-force attempt. For privacy-focused AI platforms, Argon2id can derive encryption keys directly from user passphrases on the client, so the raw passphrase is never exposed to the server.
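The derivation step can be sketched in a few lines. Python's standard library does not ship Argon2id, so this example swaps in `hashlib.scrypt`, a different memory-hard function, purely to show the shape of client-side derivation: a random per-user salt, deliberately expensive parameters, and a 32-byte output sized for an XChaCha20-Poly1305 key. The function name `derive_key` and all parameter values are illustrative assumptions; a real deployment would use an Argon2id binding with tuned memory and time costs.

```python
import hashlib
import secrets

# Illustrative scrypt cost parameters, standing in for Argon2id settings.
# Memory use is roughly 128 * r * n bytes: 128 * 8 * 2**14 = 16 MiB per derivation.
SCRYPT_N = 2 ** 14
SCRYPT_R = 8
SCRYPT_P = 1
KEY_LEN = 32  # 256-bit key, the size XChaCha20-Poly1305 expects


def derive_key(passphrase: str, salt: bytes) -> bytes:
    """Derive a symmetric key on the client; the passphrase never leaves it."""
    return hashlib.scrypt(
        passphrase.encode("utf-8"),
        salt=salt,
        n=SCRYPT_N,
        r=SCRYPT_R,
        p=SCRYPT_P,
        maxmem=64 * 1024 * 1024,  # headroom above the ~16 MiB working set
        dklen=KEY_LEN,
    )


salt = secrets.token_bytes(16)  # stored next to the ciphertext; not secret
key = derive_key("correct horse battery staple", salt)
same = derive_key("correct horse battery staple", salt)
other = derive_key("wrong passphrase", salt)
print(key == same, key == other)  # True False
```

Only the salt and the ciphertext need to live on the server. Without the passphrase, the server has no way to recompute the key, which is the property the zero-knowledge model depends on.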

Memory hardness plays a critical defensive role in modern threat models. Attackers increasingly use specialized hardware to test billions of password guesses per second against fast hash functions. Argon2id deliberately requires a configurable amount of memory for every evaluation, which limits how many guesses such hardware can run in parallel. When properly configured, it significantly raises the cost of unauthorized decryption attempts. Secure key derivation thus turns user-controlled secrets into robust cryptographic material without relying on centralized trust.
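A back-of-the-envelope calculation shows why per-guess memory matters. If each guess must hold m bytes for the duration of its computation, a device with M bytes of memory can evaluate at most ⌊M/m⌋ guesses concurrently, no matter how many cores it has. The 24 GiB device and the per-guess memory costs below are hypothetical round numbers chosen for illustration, not benchmarks or recommended Argon2id settings.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def max_parallel_guesses(device_memory_bytes: int, memory_cost_bytes: int) -> int:
    """Upper bound on concurrent guesses when every guess needs its own memory."""
    return device_memory_bytes // memory_cost_bytes


device_memory = 24 * GIB  # hypothetical accelerator memory
for memory_cost in (1 * MIB, 64 * MIB, 1 * GIB):
    lanes = max_parallel_guesses(device_memory, memory_cost)
    print(f"{memory_cost // MIB:>5} MiB per guess -> at most {lanes} concurrent guesses")
```

Raising the memory cost from 1 MiB to 64 MiB shrinks the ceiling from 24,576 to 384 concurrent guesses on the same hypothetical hardware. The bound ignores memory bandwidth and the time-cost parameter, both of which add further to the attacker's burden.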

User-Controlled Keys and Legal Constraints

Zero-knowledge architecture also changes how legal process applies to a service. A provider that does not hold the decryption keys cannot produce plaintext in response to a disclosure request. Courts can still compel production of stored data, but the ciphertext remains unreadable without the user's key. This design does not eliminate legal obligations; it limits the provider's technical capability to satisfy them. In effect, encryption becomes a boundary condition rather than a policy choice.

This distinction matters in jurisdictions with strong privacy frameworks. Data sovereignty discussions increasingly emphasize technical enforceability. When users control cryptographic keys locally, service operators cannot bypass those controls. For enterprises evaluating uncensored AI deployments, this model reduces exposure to cross-border access risks. Legal compulsion loses operational leverage when decryption authority resides exclusively with the end user.

Data Sovereignty in Germany and Renewable Energy Infrastructure in Finland

Germany maintains rigorous data protection standards under the General Data Protection Regulation and national supervisory frameworks. Hosting encrypted AI infrastructure within German jurisdiction can strengthen the compliance posture of European organizations. However, jurisdiction alone does not guarantee privacy without strong cryptographic controls. Zero-knowledge encryption ensures that even local hosting providers cannot access user content. This layered approach combines regulatory alignment with technical safeguards.

Finland offers a complementary infrastructure dimension through renewable-powered AI data centers. Its cooler climate reduces cooling costs and improves energy efficiency. Renewable generation can power high-performance inference clusters with a reduced carbon footprint. WireGuard private networking further isolates internal communication channels within such environments. Combining renewable-powered AI compute with encrypted networking shows how sustainability and privacy objectives can coexist within a single architectural strategy.

WireGuard and Private Network Segmentation

Network isolation forms another layer of defense in privacy-first AI systems. WireGuard provides modern VPN capabilities with a minimal codebase and strong cryptographic primitives. Its streamlined design reduces the attack surface compared with legacy VPN protocols. By establishing encrypted tunnels between nodes, operators can limit exposure to the public internet. This architecture supports compartmentalization within distributed inference environments.

Private networking does not replace application-layer encryption but reinforces it. Even if external perimeter defenses fail, encrypted tunnels restrict lateral movement. Security engineers often advocate defense in depth to mitigate single-point failures. WireGuard aligns with this principle through strong key exchange and authenticated session establishment. When combined with zero-knowledge storage, it creates a layered protection model from transport to application level.
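Segmentation of this kind can be sketched as a `wg-quick` configuration. The script below renders a config for one inference node that peers only with a storage node and a gateway. WireGuard's cryptokey routing accepts an inbound packet from a peer only if its source address falls within that peer's `AllowedIPs`, so narrow /32 entries restrict which nodes can talk to which, and a host with no `[Peer]` entry cannot reach the node over the tunnel at all. The node names, 10.0.0.0/24 addresses, port, hostnames, and key placeholders are all hypothetical; real keys would come from `wg genkey` and `wg pubkey`.

```python
from dataclasses import dataclass


@dataclass
class Peer:
    name: str
    public_key: str  # placeholder; real keys come from `wg genkey | wg pubkey`
    tunnel_ip: str   # the peer's address inside the private tunnel network
    endpoint: str    # public host:port where the peer listens


def render_wg_config(private_key: str, address: str, listen_port: int,
                     peers: list[Peer]) -> str:
    """Render a wg-quick config where each peer may only use its own /32."""
    lines = [
        "[Interface]",
        f"PrivateKey = {private_key}",
        f"Address = {address}",
        f"ListenPort = {listen_port}",
    ]
    for peer in peers:
        lines += [
            "",
            f"# {peer.name}",
            "[Peer]",
            f"PublicKey = {peer.public_key}",
            # A /32 binds this peer to exactly one tunnel address, so a
            # compromised peer cannot impersonate or reach other addresses via it.
            f"AllowedIPs = {peer.tunnel_ip}/32",
            f"Endpoint = {peer.endpoint}",
        ]
    return "\n".join(lines) + "\n"


config = render_wg_config(
    private_key="<inference-node-private-key>",
    address="10.0.0.2/24",
    listen_port=51820,
    peers=[
        Peer("storage", "<storage-public-key>", "10.0.0.3", "storage.example.internal:51820"),
        Peer("gateway", "<gateway-public-key>", "10.0.0.1", "gateway.example.internal:51820"),
    ],
)
print(config)
```

Generating configs from a single peer inventory, rather than editing them by hand, makes it easier to review which nodes are allowed to reach each other, which is where the lateral-movement protection actually comes from.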

Why Privacy-First AI Architecture Matters

The growth of AI across finance, health, and education elevates privacy to a YMYL ("Your Money or Your Life") concern. Sensitive prompts may include personally identifiable information, proprietary research, or confidential business strategy. In a traditional centralized system, this information is protected only by the provider's access-control and logging policies. A privacy-first approach relies less on the honor system of organizational promises and more on cryptographic guarantees. This aligns with long-standing cybersecurity best practice, which emphasizes minimizing implicit trust.

As users evaluate ChatGPT alternatives, they pay closer attention to how providers handle encryption and key management. Interest in uncensored AI is also tied to privacy: users want platforms that avoid intrusive data analysis while staying within the law. Discussions of these platforms often contain misunderstandings about architecture, so it is important to separate marketing narratives from technical reality, particularly when evaluating privacy myths in AI platforms. Zero-knowledge protocols do not eliminate liability or risk, but they limit both. Renewable-powered AI deployments add sustainability benefits without weakening encryption guarantees.

Zero-knowledge AI architecture represents a measurable evolution in how intelligent systems manage data. By eliminating provider-held decryption keys, platforms reduce the structural vulnerabilities inherent in centralized storage models. Cryptographic primitives such as XChaCha20-Poly1305 and Argon2id provide well-studied foundations for secure implementation. Infrastructure choices in Germany and Finland illustrate how geography, regulation, and renewable-powered AI infrastructure can complement an encryption strategy. As AI becomes further integrated into daily workflows, privacy architecture, rather than interface design, will increasingly define trustworthiness.