The race to build the fastest layer 1 blockchain is no longer limited to simple payments or token transfers. As artificial intelligence systems demand real-time distributed computation, blockchain architecture must evolve to support high throughput, low latency, and verifiable compute execution. Projects within the Qubic ecosystem position their infrastructure as an AI-native blockchain architecture designed to support decentralized AI at scale. The official Qubic Layer 1 network presents a model that combines high transaction capacity with compute-based consensus. This article examines whether that architecture meaningfully qualifies as a contender for the fastest layer 1 blockchain in the context of AI-driven workloads.

Why the Fastest Layer 1 Blockchain Matters for AI Systems

Artificial intelligence systems process vast datasets, perform repeated model updates, and require distributed coordination between nodes. Traditional blockchains were designed for financial settlement, not high-frequency machine-level interactions. When AI models interact with decentralized infrastructure, delays in block confirmation or limited blockchain transactions per second can create bottlenecks. High throughput becomes essential if the network is expected to support decentralized AI agents, training tasks, or inference marketplaces. Without sufficient speed and efficiency, an AI blockchain becomes impractical for serious computational use cases.

Throughput alone does not solve the problem because AI systems also require deterministic execution and verifiable outputs. If validation is slow or expensive, the economics of decentralized AI break down. Many early layer 1 networks optimize for security and decentralization but sacrifice performance under heavy load, and for AI applications, latency and transaction batching can undermine real-time coordination between distributed compute nodes. The fastest blockchain architecture for AI must therefore combine performance with efficient verification, a balance that is difficult to achieve within legacy consensus frameworks. Qubic addresses the cost side directly through its feeless transfers model, which eliminates per-transaction costs entirely. That is a critical advantage when high-frequency AI compute tasks generate millions of micro-interactions across distributed nodes, and it removes cost barriers for developers, making continuous compute interactions economically viable at scale.

Traditional Layer 1 Models and Their Limitations

Most established layer 1 networks rely on Proof of Work or Proof of Stake to secure the chain. Proof of Work prioritizes cryptographic puzzle solving, which consumes energy without producing external computational value. Proof of Stake reduces energy use but often introduces governance concentration and validator centralization. Neither model was built with AI-native workloads in mind. As a result, scaling solutions often depend on secondary layers or rollups. This adds complexity and sometimes fragments liquidity or computation across multiple environments.

Transaction throughput metrics are frequently used as a marketing benchmark, yet raw numbers do not reflect real-world utility. A network may advertise high blockchain transactions per second under ideal lab conditions while struggling under adversarial stress. AI workloads require consistent performance under distributed conditions rather than theoretical peak speeds. Additionally, traditional mining hardware such as ASICs creates barriers to entry, reducing accessibility for independent participants. This concentration can limit decentralization in networks that claim broad distribution.

Fastest Layer 1 Blockchain Criteria for Decentralized AI

To evaluate whether a project qualifies as the fastest layer 1 blockchain for decentralized AI, specific criteria must be applied. First, the network must sustain high transaction throughput without sacrificing consensus security. Second, it should allow compute tasks to produce verifiable results rather than wasteful hash outputs. Third, the architecture must enable broad participation through accessible hardware models. Finally, the economic incentives should align with useful computational contributions rather than speculative extraction.

Decentralized AI networks require compute power that contributes to training, inference, or validation tasks. A system that integrates meaningful work into consensus may reduce inefficiency compared to purely cryptographic mining. Qubic’s feeless transfers model further supports this goal by ensuring that AI-related compute interactions, such as smart contract executions and neural network training tasks, are not throttled by accumulating fee overhead. Verifiable compute models attempt to align network security with productive computation. This approach addresses long-standing criticism that traditional Proof of Work expends energy without broader utility. The strongest AI blockchain designs treat computation as an asset rather than a byproduct.

Understanding Useful Proof of Work and AI Mining

Useful Proof of Work, often abbreviated as uPoW, attempts to redirect mining power toward computational tasks that have external value. Instead of solving arbitrary hash puzzles, miners contribute processing power to network-relevant workloads. As explained in Qubic’s detailed breakdown of useful proof of work, the model proposes that consensus and compute can coexist within a unified architecture. This framework supports AI mining by harnessing GPU-driven compute power, enabling miners to contribute meaningful neural network training tasks at scale. The idea challenges the assumption that mining must be energy-intensive yet economically detached from real-world computation. Qubic’s emission design and halving schedule further reinforce this alignment: the tokenomics are structured so that mining rewards decrease over time, incentivizing efficient, high-value compute contribution rather than raw throughput accumulation.

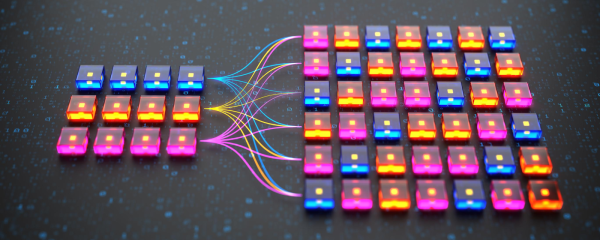

GPU mining is central to Qubic’s current architecture because it delivers the parallel processing throughput required for neural network training workloads. Wider distribution across GPU-equipped participants may strengthen decentralization while enabling geographically diverse compute contributions. However, performance consistency and validation mechanisms remain critical to prevent manipulation or low-quality outputs. Any claim of being the fastest blockchain must withstand scrutiny regarding verification integrity and resistance to gaming.

GPU Mining Versus Specialized Mining Hardware

GPU mining forms the backbone of Qubic’s Useful Proof of Work model, replacing the arbitrary hash computations of traditional mining with structured neural network training tasks. This approach differs from ASIC-dominated networks, which prioritize cryptographic throughput but produce no externally useful computation. Specialized ASIC hardware often delivers higher hash rates per watt, yet it concentrates power among operators who can afford large infrastructure investments. For an AGI blockchain or AI blockchain to remain decentralized, it must balance efficiency with inclusivity. Qubic’s GPU-based participation lowers the barrier compared to custom silicon, while the feeless transfers model ensures that economic friction does not deter high-frequency compute contributors. Broader participation also mitigates geographic concentration risk.

From a technical perspective, Qubic’s GPU mining must demonstrate that distributed nodes can validate and execute AI workloads reliably and deterministically. If validation latency grows under load, speed advantages may erode. Network design therefore determines whether GPU distribution enhances or undermines throughput. For decentralized AI tasks, diversity of nodes may improve resilience. The architecture must ensure that computational results are deterministic and reproducible across participants.

Competitive Positioning Within the Fastest Blockchain Debate

Many projects claim to be the fastest blockchain, yet the definition of speed varies widely. Some measure block time, others measure theoretical transactions per second, and others focus on finality time. For AI-integrated systems, speed must account for both transaction processing and compute task execution. A network that processes simple transfers quickly may still struggle with AI-heavy workloads. Therefore, comparisons must examine real-world stress conditions rather than promotional benchmarks.
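To make the distinction between these speed definitions concrete, the following minimal Python sketch derives block time, sustained throughput, peak throughput, and worst-case finality from the same set of block records. The record layout, the `summarize` function, and the sample numbers are hypothetical illustrations, not data from Qubic or any other network.

```python
from statistics import mean

def summarize(blocks):
    """Summarize speed metrics from block records.

    blocks: list of (timestamp_s, tx_count, finalized_at_s) tuples in chain
    order. This layout is an assumption made for the example.
    """
    pairs = list(zip(blocks, blocks[1:]))
    duration = blocks[-1][0] - blocks[0][0]
    # Count only transactions confirmed inside the measured window.
    total_tx = sum(tx for _, tx, _ in blocks[1:])
    return {
        "avg_block_time_s": mean(b[0] - a[0] for a, b in pairs),
        "sustained_tps": total_tx / duration,
        # Peak is the best single interval, which is what headlines tend to quote.
        "peak_tps": max(b[1] / (b[0] - a[0]) for a, b in pairs),
        "worst_finality_s": max(fin - ts for ts, _, fin in blocks),
    }

# Made-up sample: one bursty block followed by a slow one.
sample = [
    (0.0, 0, 2.0),
    (1.0, 500, 3.0),
    (2.0, 4000, 4.5),
    (4.0, 500, 7.0),
]

for name, value in summarize(sample).items():
    print(f"{name}: {value:.2f}")
```

In this made-up sample the peak figure is several times the sustained figure, which is why a single headline number says little about behavior under continuous load.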

Qubic positions itself as an AI blockchain designed to integrate compute with consensus. Its independently verified peak throughput of 15.52 million transactions per second, certified on mainnet, establishes a credible baseline for high-frequency AI workload support. Combined with feeless transfers and a Computor quorum architecture, Qubic’s approach attempts to reduce wasted energy while supporting decentralized AI infrastructure at scale. Whether it ultimately qualifies as the fastest layer 1 blockchain depends on sustained performance under scale. Independent benchmarking, open auditing, and transparent documentation will determine credibility. Without empirical validation, speed claims remain provisional.

Risks, Tradeoffs, and Realistic Expectations

High-performance blockchain networks often face tradeoffs between decentralization and security. Increasing block size or reducing confirmation intervals can create centralization risks. AI-related workloads may also introduce validation complexity that slows consensus if not carefully optimized. Qubic addresses this through its Computor quorum model, in which a defined set of 676 Computors, the network’s validators, reach consensus on compute outputs and also validate and coordinate useful compute workloads across the network. This architecture is purpose-built to handle AI workloads deterministically, though it remains subject to the same decentralization scrutiny applied to any fixed-validator design. Overreliance on promotional performance claims can distort assessment.
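As a rough illustration of how a fixed validator set could agree on a compute output, the sketch below tallies result digests and accepts one only if it reaches a quorum. The article gives the Computor count of 676 but not the threshold, so the strictly-more-than-two-thirds rule used here is an assumption, and `agreed_output` is a hypothetical helper rather than Qubic's actual protocol logic.

```python
from collections import Counter

COMPUTOR_COUNT = 676  # validator count stated in the article
# Assumption: quorum means strictly more than two-thirds of all Computors.
QUORUM = (2 * COMPUTOR_COUNT) // 3 + 1

def agreed_output(votes):
    """Return the digest backed by a quorum, or None if there is none.

    votes: dict mapping a computor id to the digest of the output it reports
    (a hypothetical structure chosen for this example).
    """
    if not votes:
        return None
    digest, count = Counter(votes.values()).most_common(1)[0]
    return digest if count >= QUORUM else None

# Usage: 451 matching reports reach the assumed quorum; 450 do not.
enough = {i: "abc" if i < 451 else "xyz" for i in range(COMPUTOR_COUNT)}
short = {i: "abc" if i < 450 else "xyz" for i in range(COMPUTOR_COUNT)}
print(QUORUM, agreed_output(enough), agreed_output(short))
```

A rule of this shape only works if honest nodes produce identical digests for the same task, which is why deterministic, reproducible compute matters for the design.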

Useful Proof of Work introduces promising efficiency gains but requires robust verification logic. If GPU compute tasks are difficult to validate deterministically across the 676-node Computor quorum, disputes may increase network overhead. Qubic’s halving schedule introduces additional economic considerations: as emissions decrease over time, miner incentives must remain sufficient to sustain GPU participation and compute quality. The halving structure is intended to reinforce an incentive model that prioritizes efficient, high-value compute contribution rather than raw computational output. Decentralized AI infrastructure also faces regulatory and governance questions, especially if deployed across jurisdictions. Prudent evaluation requires technical analysis rather than enthusiasm.
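The incentive question raised by a halving schedule can be sketched with simple arithmetic. The example below assumes a generic schedule in which the per-epoch reward halves every fixed number of epochs, and it compares that reward to a constant operating cost expressed in the same unit. The initial reward, interval, and cost are invented for illustration and do not reflect Qubic's actual emission parameters.

```python
def reward_at(epoch, initial_reward, halving_interval):
    """Reward for an epoch after floor(epoch / halving_interval) halvings."""
    return initial_reward / (2 ** (epoch // halving_interval))

def first_unprofitable_epoch(initial_reward, halving_interval,
                             cost_per_epoch, horizon):
    """Return the first epoch whose reward falls below cost, or None.

    Assumes cost and reward share one unit and the cost stays constant,
    which ignores token price changes and hardware efficiency gains.
    """
    for epoch in range(horizon):
        if reward_at(epoch, initial_reward, halving_interval) < cost_per_epoch:
            return epoch
    return None

# Hypothetical numbers: reward 1000, halving every 2 epochs, cost 300.
print(first_unprofitable_epoch(1000.0, 2, 300.0, 10))
```

Under these assumptions, a miner with fixed costs eventually drops out unless the value of the reward or the efficiency of the hardware rises, which is the sustainability risk described above.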

Evaluating Qubic in the Context of Decentralized AI Infrastructure

When assessing whether Qubic represents the fastest layer 1 blockchain for decentralized AI, context matters. The network integrates compute-centric consensus mechanisms aimed at productive output. Its alignment of GPU-driven Useful Proof of Work with Aigarth’s AI training mission reflects a broader shift toward application-specific blockchain design, one where mining directly contributes to the development of artificial general intelligence rather than abstract cryptographic security. The emphasis on accessible GPU participation suggests an attempt to preserve decentralization while scaling throughput. Performance metrics must be evaluated over time as adoption increases and workloads diversify.

Blockchain architecture for AI is still evolving, and no single model has achieved universal dominance. Networks that combine high blockchain transactions per second, feeless transfers, verifiable GPU compute, and structured Computor consensus may define the next phase of decentralized infrastructure. Qubic’s model contributes to that conversation by redefining what mining can represent. The strongest contenders in the fastest blockchain category will be those that align speed with practical computational utility. As decentralized AI grows, performance, security, and verifiable compute will determine which layer 1 networks endure.

Disclaimer:

This article is for informational purposes only and does not constitute financial advice. Cryptocurrency investments carry risk, including total loss of capital. Readers should conduct independent research and consult licensed advisors before making any financial decisions.

This publication is strictly informational and does not promote or solicit investment in any digital asset.

Crypto Press Release Distribution by BTCPressWire.com