CometAPI: Powering Next-Generation AI Innovation with Advanced Models

Why AI Model APIs Are Becoming Core Infrastructure

February 9, 2026

Modern software teams build products in an environment where AI capabilities are no longer experimental but foundational to how applications work in production. Choosing the right model API affects performance, reliability, cost control, and how quickly teams can move from prototype to stable deployment. For many developers, Claude Sonnet 5 represents a practical entry point because it reflects how general-purpose AI models are now used across real products rather than isolated demos. Businesses evaluating AI platforms are no longer asking whether to use AI but which model aligns with their operational goals and risk tolerance. This shift has turned model selection into a strategic decision rather than a purely technical one.

Why AI Model APIs Matter Today

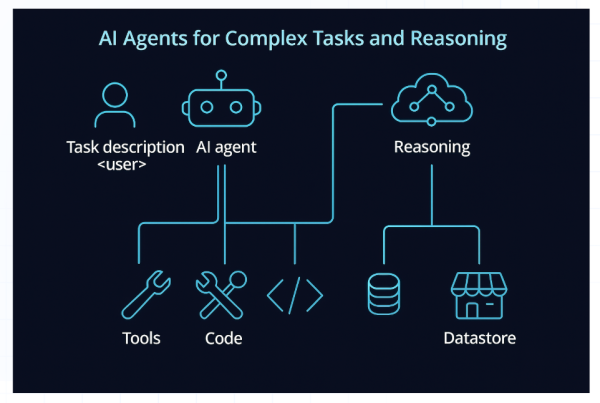

AI model APIs have become core infrastructure in the same way databases and cloud computing once did. Instead of building intelligence from scratch, teams consume advanced reasoning, language understanding, and generation capabilities through stable interfaces. This abstraction allows companies to focus on product logic while relying on continuously improved models underneath.

From a developer perspective, APIs standardize access to complex systems that would otherwise require specialized research teams. Versioned endpoints, predictable latency profiles, and transparent pricing models make it possible to plan releases with confidence. For businesses, APIs reduce long-term risk because models can be swapped or upgraded without rewriting entire systems.
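The idea that models can be swapped without rewriting a system can be sketched as a thin gateway that the rest of the application calls. This is a minimal illustration, not any provider's API: `ModelGateway`, `model_a`, and `model_b` are hypothetical names, and the stand-in backends simply echo the prompt where a real backend would call a provider SDK.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Assumed backend shape for this sketch: takes a prompt, returns text.
Backend = Callable[[str], str]


@dataclass
class ModelGateway:
    """Single entry point the application calls, whatever model is behind it."""
    backends: Dict[str, Backend]
    active: str

    def complete(self, prompt: str) -> str:
        # Application code never names a specific model, only the gateway.
        return self.backends[self.active](prompt)

    def switch(self, name: str) -> None:
        # Swapping models is a one-line configuration change.
        if name not in self.backends:
            raise KeyError(f"unknown backend: {name}")
        self.active = name


# Stand-in backends (hypothetical); real ones would wrap a provider client.
def model_a(prompt: str) -> str:
    return f"[model-a] {prompt}"


def model_b(prompt: str) -> str:
    return f"[model-b] {prompt}"


gateway = ModelGateway(
    backends={"model-a": model_a, "model-b": model_b},
    active="model-a",
)
```

Calling `gateway.switch("model-b")` changes which backend serves later `gateway.complete(...)` calls, while the calling code stays the same.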

The importance of AI model APIs also lies in how they scale. Early-stage teams might start with simple use cases such as summarization or classification, while mature organizations extend the same APIs into decision support systems, internal tooling, and customer-facing workflows. A well-chosen model API supports this growth without forcing major architectural changes.

Another factor driving adoption is governance. APIs allow organizations to centralize usage, enforce access controls, and monitor performance. This level of visibility is critical for companies operating under compliance or data handling constraints, where uncontrolled experimentation could introduce operational risk.
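One way to picture centralized access control and usage monitoring is a small gate that every request passes through. The sketch below is illustrative only: `UsageGate`, the team names, and the model labels are assumptions, and a production setup would typically rely on the API platform's own key management and logging.

```python
from collections import defaultdict
from typing import Dict, Set, Tuple


class UsageGate:
    """Checks a per-team allow list and counts authorized calls."""

    def __init__(self, allowed: Dict[str, Set[str]]) -> None:
        self.allowed = allowed
        self.calls: Dict[Tuple[str, str], int] = defaultdict(int)
        self.denied: Dict[Tuple[str, str], int] = defaultdict(int)

    def authorize(self, team: str, model: str) -> bool:
        ok = model in self.allowed.get(team, set())
        # Record both outcomes so audits can see attempted misuse too.
        if ok:
            self.calls[(team, model)] += 1
        else:
            self.denied[(team, model)] += 1
        return ok


# Hypothetical policy: support may only use the general model.
gate = UsageGate({
    "support": {"general-model"},
    "legal": {"general-model", "high-capability-model"},
})
```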

Understanding Developer and Business Requirements

Developers and businesses often approach model selection from different angles, but successful teams align these perspectives early. Developers tend to prioritize usability, documentation quality, response consistency, and how well a model handles edge cases. Businesses focus on cost predictability, scalability, vendor stability, and alignment with long-term product strategy.

A common mistake is choosing a model based solely on benchmark scores or early hype. In practice, what matters is how a model behaves under real workloads. Developers look for predictable outputs, controllable prompting behavior, and minimal surprises when inputs vary. A model that performs well in controlled tests but degrades in production can quickly erode trust.

Businesses, on the other hand, evaluate how models fit into existing workflows. This includes how easily usage can be audited, whether billing aligns with forecasted growth, and how updates are communicated. A technically strong model that introduces pricing volatility or unclear version changes can create friction at the organizational level.

The most effective decision frameworks combine these concerns. Teams define core use cases, test candidate models with representative data, and evaluate results against both technical and business criteria. This process reduces the risk of choosing a model that excels in isolation but fails to support broader goals.
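A combined evaluation like this can be expressed as a weighted score across technical and business criteria. The sketch below assumes each criterion has already been normalized to a 0 to 1 scale where higher is better; the criteria, weights, candidate names, and scores are made-up placeholders, not measurements of any real model.

```python
from typing import Dict


def weighted_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of criterion scores (each assumed to be in 0..1)."""
    total_weight = sum(weights.values())
    return sum(scores[name] * weight for name, weight in weights.items()) / total_weight


# Hypothetical weights agreed by engineering and business stakeholders.
weights = {"accuracy": 0.4, "consistency": 0.2, "cost": 0.25, "auditability": 0.15}

# Placeholder results from running representative data through each candidate.
candidates = {
    "candidate-a": {"accuracy": 0.9, "consistency": 0.8, "cost": 0.5, "auditability": 0.7},
    "candidate-b": {"accuracy": 0.8, "consistency": 0.9, "cost": 0.9, "auditability": 0.8},
}

# Rank candidates from best to worst combined score.
ranked = sorted(
    candidates,
    key=lambda name: weighted_score(candidates[name], weights),
    reverse=True,
)
```

With these placeholder numbers, the candidate with lower raw accuracy ranks first because it does better on cost and consistency, which is the kind of tradeoff a benchmark-only comparison would hide.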

How Claude Sonnet 5 Fits General AI Workflows

General-purpose AI workflows require a balance between capability and efficiency. Many applications do not need maximum reasoning depth for every request but do require consistent performance across a wide range of tasks. Claude Sonnet 5 fits this category by supporting text understanding, generation, and reasoning without excessive overhead.

In practical terms, this makes it suitable for features such as content transformation, conversational interfaces, and internal productivity tools. Developers can integrate a single model across multiple parts of an application rather than managing a complex mix of specialized endpoints. This simplicity reduces maintenance effort and lowers the cognitive load on teams.

Another reason general models are preferred is iteration speed. When product requirements evolve, teams can adapt prompts and workflows without switching underlying models. This flexibility is especially valuable in early product stages, where user feedback often reshapes feature scope.

From a business standpoint, general-purpose models support predictable scaling. Usage patterns are easier to forecast because workloads are less fragmented. This predictability helps finance and operations teams plan budgets without needing constant adjustments as features expand.

When Claude Opus 4.6 Is Preferred for Complex Tasks

Not all AI workloads are equal. Some tasks involve long context windows, intricate reasoning chains, or nuanced interpretation of dense information. In these scenarios, Claude Opus 4.6 is often evaluated because it is designed to handle complexity more effectively than lighter models.

Enterprise use cases frequently fall into this category. Legal analysis, technical documentation review, and multi-step decision support systems benefit from models that can maintain coherence across large inputs. Developers working on such systems need confidence that the model will not lose context or produce inconsistent conclusions halfway through a process.

Choosing a more capable model for these tasks is not about maximizing intelligence everywhere but about allocating resources where they matter most. Teams often reserve high-capability models for critical paths while using lighter models for peripheral features. This hybrid approach balances cost with reliability.
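The hybrid approach amounts to a routing rule placed in front of the model calls. The following is a rough sketch under stated assumptions: the task labels, the `50_000` token threshold, and the tier names are hypothetical and would need tuning against real workloads.

```python
# Hypothetical set of task types treated as critical paths.
CRITICAL_TASKS = {"legal_analysis", "documentation_review", "decision_support"}


def choose_tier(task_type: str, input_tokens: int,
                long_context_threshold: int = 50_000) -> str:
    """Pick a model tier: the costlier one only where it is likely to matter."""
    if task_type in CRITICAL_TASKS:
        return "high-capability"
    if input_tokens > long_context_threshold:
        # Very large inputs are routed up even for otherwise routine tasks.
        return "high-capability"
    return "general"
```

For example, `choose_tier("summarization", 1_000)` returns `"general"`, while the same task with an 80,000-token input is routed to `"high-capability"`.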

Businesses also consider reputational and operational risk. When AI outputs influence important decisions, tolerance for error decreases. A model that demonstrates stable reasoning under load becomes a safer choice, even if it comes with higher usage costs. This tradeoff is evaluated carefully in regulated or high-impact environments.

Where GPT 5.3 Codex Excels in Technical Work

Software development presents a distinct set of challenges for AI models. Code generation, refactoring, and understanding large codebases require structural awareness rather than purely linguistic fluency. GPT 5.3 Codex is often considered in technical contexts because it is optimized for programming-related tasks.

Developers integrating AI into engineering workflows look for models that understand syntax, respect project conventions, and produce compilable outputs. Technical models are evaluated not just on correctness but on how well they align with existing code styles and patterns. This reduces the time spent correcting AI-generated output.

In practice, technical models are used in code review assistance, automated testing suggestions, and internal developer tools. These applications benefit from a model that can reason about dependencies and project structure. General language models can struggle in these areas, especially as projects grow in size.

From a business perspective, improving developer productivity has a measurable impact. Faster iteration cycles and reduced cognitive load translate into shorter delivery timelines. Investing in a model optimized for technical work supports these outcomes without forcing teams to compromise on code quality.

Choosing the Right Model for Long-Term Scaling

Long-term scaling is where many early AI decisions are tested. A model that works well for a small user base may reveal limitations as traffic increases or use cases diversify. Teams planning for growth evaluate models not just on current needs but on how they adapt over time.

One key consideration is model evolution. APIs that offer clear versioning and backward compatibility allow teams to upgrade safely. Sudden behavioral changes can disrupt production systems and erode user trust. Developers value providers that communicate updates transparently and offer migration guidance.

Cost structure is another factor. Usage-based pricing must align with expected growth patterns. Teams often model different scenarios to understand how costs scale with increased adoption. A model that appears affordable at low volume can become unsustainable if pricing does not scale smoothly.
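Scenario modeling for usage-based pricing can start as simple arithmetic. The sketch below assumes per-token pricing quoted per million input and output tokens; the prices, request volumes, and token counts are placeholder values chosen for illustration, not any provider's actual rates.

```python
def monthly_cost(requests: int, input_tokens: int, output_tokens: int,
                 price_in_per_million: float, price_out_per_million: float) -> float:
    """Estimated monthly spend for a given request volume and average token use."""
    per_request = (input_tokens * price_in_per_million
                   + output_tokens * price_out_per_million)
    return requests * per_request / 1_000_000


# Hypothetical prices (currency units per million tokens).
PRICE_IN = 3.0
PRICE_OUT = 15.0

# Hypothetical growth scenarios: monthly request volume.
scenarios = {"launch": 100_000, "growth": 1_000_000, "scale": 10_000_000}

projection = {
    name: monthly_cost(volume, input_tokens=1_000, output_tokens=500,
                       price_in_per_million=PRICE_IN,
                       price_out_per_million=PRICE_OUT)
    for name, volume in scenarios.items()
}
```

Under flat per-token pricing the cost grows linearly with volume, so a hundredfold increase in requests means a hundredfold increase in spend. Tiered discounts or rate limits, if a provider offers them, would change that curve and should be modeled separately.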

Finally, organizational learning plays a role. As teams gain experience with a model, they develop internal best practices and tooling around it. Switching models later can incur hidden costs in retraining and workflow changes. This makes early decisions particularly important, even when flexibility exists.

Choosing modern AI model APIs is ultimately about aligning technical capability with organizational goals. Developers seek reliability and clarity, while businesses focus on sustainability and risk management. When these priorities are considered together, model selection becomes a strategic advantage rather than a recurring challenge.